Organizations have come to realize that data is a core part of their strategy, and that a scalable distributed computing platform is central to their technology investment. However, big data practitioners face a common challenge: deciding which use case to implement first on their journey toward realizing their big data strategy. In reality, multiple items need to be addressed: choosing the right technology, securing the requisite funding, and finding the right technical talent. Still, identifying the right use case with a defined success outcome is the most crucial step in starting a big data project.

Topics: Cloud, Data, BI on Big Data, Data Lake

Customers often ask me about AtScale’s ability to integrate into their operational stack. Today, I want to highlight a component of AtScale’s Development Extensions called Webhooks.

A webhook (also called a web callback or HTTP push API) is a way for an application to provide other applications with real-time information. A webhook delivers data to other applications as events occur, so you get the data immediately. With a REST API, by contrast, you would need to poll frequently to get data that is close to real time. This makes webhooks much more efficient for both provider and consumer.
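To make the push model concrete, here is a minimal sketch of a webhook receiver built only on Python's standard library. It assumes the provider sends a JSON body via HTTP POST; the `received_events` list, the `start_receiver` helper, and any event names or paths you send to it are hypothetical illustrations, not AtScale's actual webhook payload format or configuration. The point is that the consumer simply waits to be called, instead of repeatedly polling a REST endpoint.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Events pushed to us by the provider (hypothetical in-memory store).
received_events = []


class WebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the JSON body the provider pushed when an event occurred.
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        payload = json.loads(body) if body else {}
        received_events.append(payload)
        # Acknowledge quickly; 204 means "received, nothing to return".
        self.send_response(204)
        self.end_headers()

    def log_message(self, format, *args):
        # Silence the default per-request logging to stderr.
        pass


def start_receiver(port=0):
    """Serve webhook POSTs in a background thread; port=0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), WebhookHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

In practice, you would register the receiver's URL with the provider and usually verify a shared secret or signature before trusting a payload; that step is omitted from this sketch.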

Topics: BI, Analytics, Data, BI on Big Data, best practices, webhooks, API

If you are a Tableau fan like me, you’ve probably used Tableau for many years, attended numerous Tableau Conferences, and cheered with great enthusiasm when the engineers at Tableau demonstrated the latest and greatest enhancements to the software. You are probably also accustomed to creating your own calculations based on the row-level data you are connected to. You enjoy the freedoms that Tableau offers.

Topics: Tableau, bi-on-hadoop, Analytics, BI on Big Data, best practices

How valuable is an insight if you don’t know what’s driving it?

Companies have invested significantly in big data in recent years, and many are now looking to capitalize on the insights waiting to be discovered in their expansive data lakes. Developing an analytic solution is a difficult and laborious process, and more than a few projects have been abandoned long before any conclusive benefit was realized. Some efforts end because of time and budget constraints, others because of bad design or poor end-user adoption. That last point is significant: you’re going to spend considerable resources to empower your decision makers. If you build it and they don’t come, then what?

Topics: Business Intelligence, Big Data, Semantic Layer, Analytics, BI on Big Data, Data Lake, data driven

Congratulations! Your data is controlled, aggregated, and turbocharged in your AtScale Virtual Cube. You have Tableau to create remarkable visualizations. Your data is happy! But are your cube designers and business users happy too? For instance, did you know that centralizing calculations in your AtScale Virtual Cube eliminates the proliferation of Tableau Data Extracts (TDEs), third-party ETL processes, and version-control headaches? For an enhanced AtScale experience, here are five best practices you should implement to get the most out of AtScale on Tableau.

Topics: Business Intelligence, Big Data, BI, Analytics, BI on Big Data, best practices

Data Lake Intelligence with AtScale

In my recent Data Lake 2.0 article, I described how the worlds of big data and cloud are coming together to reshape the concept of the data lake. The data lake is an important element of any modern data architecture, and its footprint will continue to expand. However, the data lake investment is only one part of delivering a modern data architecture. At Yahoo!, in addition to building a Hadoop-based data lake, we also needed to connect traditional business intelligence workloads to this Hadoop data. Although the term “Data Lake” didn’t exist back then, we were solving the problem of “How can you deliver an interactive BI experience on top of a scale-out Data Lake?” It turns out we were pioneers in delivering Data Lake Intelligence.

Our experiences and lessons from those initial efforts led to the architecture at the core of the AtScale Intelligence Platform. Because AtScale was built from the ground up to deliver business-friendly insights from the vast amounts of information in data lakes, it has seen tremendous success and adoption in enterprises ranging from financial services to retail to digital media. With the release of AtScale 6.5, we’ve continued to build on and expand AtScale’s ability to uniquely deliver on the promise of Data Lake Intelligence. If you’d like to learn more, keep reading!

Topics: Business Intelligence, bi-on-hadoop, Big Data, Cloud, BI, Analytics, BI on Big Data, Data Strategy, data driven

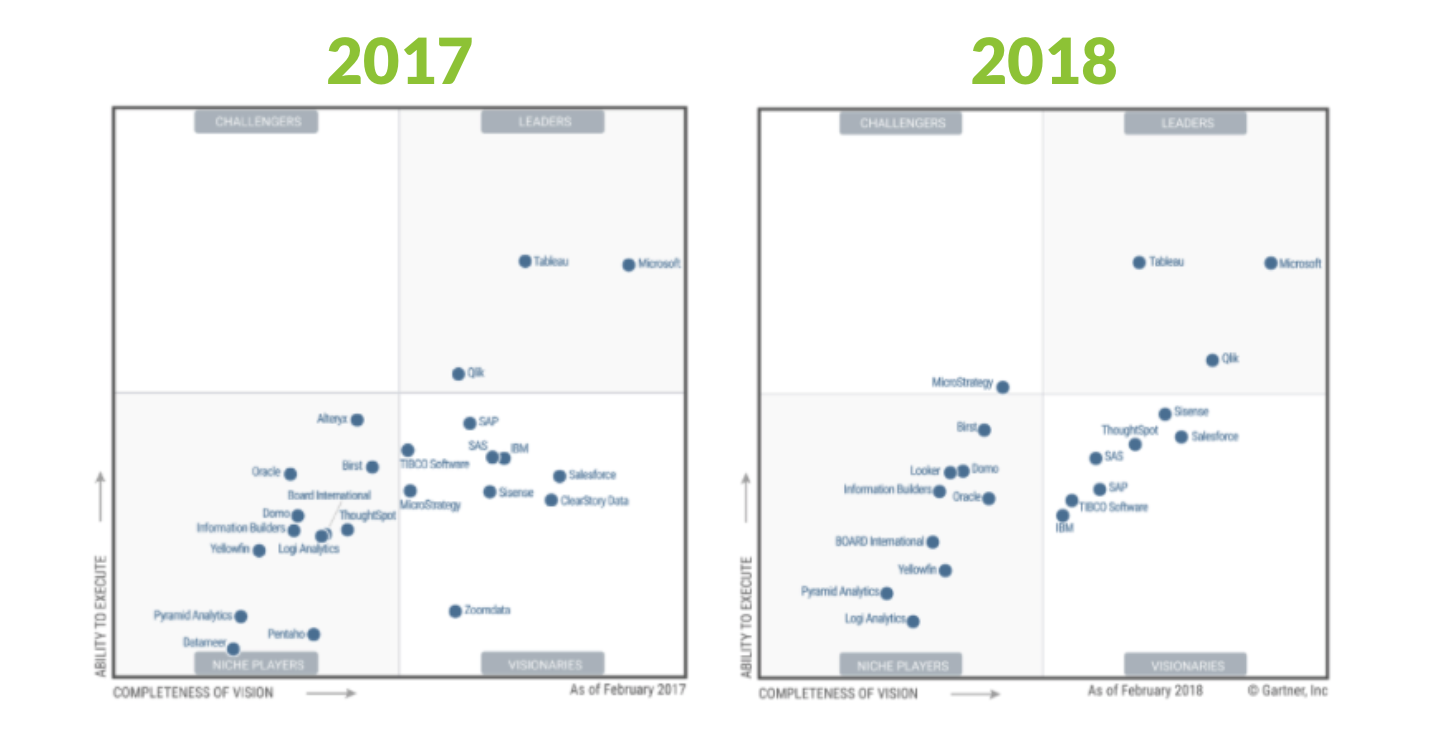

Yesterday, Gartner published the 2018 Magic Quadrant for Business Intelligence. The MQ research for BI has existed for close to a decade, and it is THE reference document for buyers of Business Intelligence technology.

At face value, not much seems to have changed. BUT if you take a closer look, you’ll notice that some of the biggest changes in the history of the Magic Quadrant just occurred.

Topics: Tableau, Gartner, Business Intelligence, GartnerBI, BI on Big Data, Microsoft, Amazon, microstrategy, google

Additional contribution by: Santanu Chatterjee, Trystan Leftwich, Bryan Naden.

In the previous post, we demonstrated how to model percentile estimates and use them in Tableau without moving large amounts of data. You may ask: “How accurate are the results, and how much load is placed on the cluster?” In this post, we discuss the accuracy and scaling properties of the AtScale percentile estimation algorithm.

To learn how to be a data-driven organization, watch this webinar now!

Topics: Hadoop, bi-on-hadoop, Analytics, BI on Big Data, percentiles

Additional contribution by: Santanu Chatterjee, Trystan Leftwich, Bryan Naden.

In the previous post, we discussed typical use cases for percentiles and the advantages of percentile estimates. In this post, we illustrate how to model percentile estimates with AtScale and use them in Tableau.

To learn how to be a data-driven organization, check out this webinar!

Topics: Hadoop, bi-on-hadoop, Analytics, BI on Big Data, percentiles

Additional contribution by: Santanu Chatterjee, Trystan Leftwich, Bryan Naden.

A new and powerful method of computing percentile estimates on Big Data is now available to you! By combining the well-known t-Digest algorithm with its semantic layer and smart aggregation features, AtScale addresses gaps in both the Business Intelligence and Big Data landscapes. Most BI tools have features to compute and display various percentiles (e.g., medians and interquartile ranges), but they move data for processing, which dramatically limits the size of the analysis. The Hadoop-based SQL engines (Hive, Impala, Spark) can compute approximate percentiles on large datasets; however, these expensive calculations are not aggregated and reused to answer similar queries. AtScale offers robust percentile estimates that work with AtScale’s semantic layer and aggregate tables to provide fast, accurate, and reusable percentile estimates.

In this three-part blog series, we discuss the benefits of percentile estimates and how to compute them in a Big Data environment. Subscribe today to learn best practices for percentile estimation on Big Data and more. Let's dive right in!
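The reusability described above rests on t-Digest sketches being mergeable: a percentile estimate kept per aggregate can later be combined with others without rescanning raw rows. Below is a minimal, simplified merging t-Digest in Python, assuming the k1 scale function and a default compression of 100. The class name `TDigest`, its methods, and the four-partition demo are hypothetical illustrations, not AtScale's implementation or any particular library's API.

```python
import math
import random


class TDigest:
    """Simplified merging t-Digest (illustrative sketch only)."""

    def __init__(self, compression=100):
        self.compression = compression  # delta: larger means more centroids, more accuracy
        self.centroids = []  # list of [mean, weight]

    def _k(self, q):
        # k1 scale function: k(q) = delta / (2*pi) * asin(2q - 1).
        # It is steep near q = 0 and q = 1, so tail centroids stay small.
        q = min(max(q, 0.0), 1.0)  # guard against float drift outside [0, 1]
        return self.compression / (2 * math.pi) * math.asin(2 * q - 1)

    def add(self, x, weight=1.0):
        self.centroids.append([float(x), float(weight)])
        if len(self.centroids) > 20 * self.compression:
            self._compress()  # keep the buffer bounded

    def _compress(self):
        if not self.centroids:
            return
        cs = sorted(self.centroids, key=lambda c: c[0])
        total = sum(w for _, w in cs)
        merged = []
        weight_before = 0.0  # weight of finished centroids to the left
        cur_mean, cur_w = cs[0]
        for mean, w in cs[1:]:
            q_left = weight_before / total
            q_right = (weight_before + cur_w + w) / total
            if self._k(q_right) - self._k(q_left) <= 1.0:
                # Absorb into the current centroid (weighted mean).
                cur_mean = (cur_mean * cur_w + mean * w) / (cur_w + w)
                cur_w += w
            else:
                merged.append([cur_mean, cur_w])
                weight_before += cur_w
                cur_mean, cur_w = mean, w
        merged.append([cur_mean, cur_w])
        self.centroids = merged

    def merge(self, other):
        """Combine two digests, e.g. partial results from two partitions."""
        out = TDigest(self.compression)
        out.centroids = [c[:] for c in self.centroids + other.centroids]
        out._compress()
        return out

    def total_weight(self):
        return sum(w for _, w in self.centroids)

    def quantile(self, q):
        self._compress()
        cs = self.centroids
        if not cs:
            raise ValueError("empty digest")
        target = q * self.total_weight()
        cumulative = 0.0
        prev_mid, prev_mean = None, None
        for mean, w in cs:
            mid = cumulative + w / 2  # rank at this centroid's center
            if target <= mid:
                if prev_mid is None:
                    return mean
                # Linear interpolation between adjacent centroid centers.
                frac = (target - prev_mid) / (mid - prev_mid)
                return prev_mean + frac * (mean - prev_mean)
            prev_mid, prev_mean = mid, mean
            cumulative += w
        return cs[-1][0]


if __name__ == "__main__":
    # Four hypothetical "partitions", each keeping its own small digest.
    random.seed(1)
    values = [random.random() for _ in range(20000)]
    parts = [TDigest() for _ in range(4)]
    for i, v in enumerate(values):
        parts[i % 4].add(v)
    combined = parts[0].merge(parts[1]).merge(parts[2]).merge(parts[3])
    print("estimated median:", combined.quantile(0.5))
    print("exact median:", sorted(values)[len(values) // 2])
```

The main design choice is the scale function: because k1 is steep near the extremes, centroids in the tails hold few points, which is why t-Digest tends to be most accurate for extreme percentiles such as p99. A production implementation would also need careful buffering, serialization, and edge-case handling.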

To learn how to be a data-driven organization, check out this webinar!

Topics: Hadoop, bi-on-hadoop, Analytics, BI on Big Data, percentiles