Part One - Modeling Many-to-Many Relationships Using Bridge Tables

Improve BI Performance Through Efficient Time-Specific Calculations

The more things change, the more they stay the same. There is a tremendous push towards new platforms capable of dealing with massive amounts of data. Data is flowing at a scale once considered impossible, and the sources of data are more diverse than ever. It’s coming from ERP systems, general ledgers, weather feeds, supply chains, IoT, and many other sources. And while AI and ML are discovering brand new ways to analyze data, there are critical business analytics that still need to run.

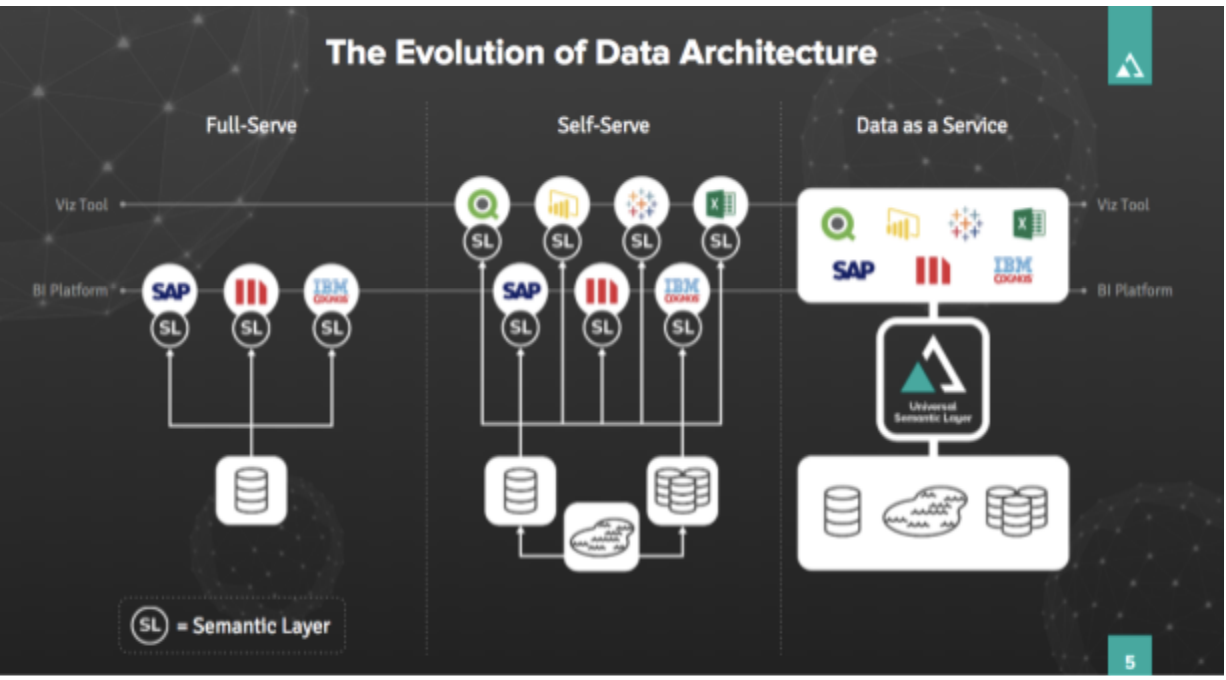

Enterprise data management has changed immensely over the past few decades. We’ve lived through data warehousing and data marts, and struggled with scaling to huge data volumes and with slow query performance. As time progressed, true Big Data platforms like Hadoop emerged, and they are now joined by Amazon Redshift, Google BigQuery, and others. In this age of limitless scalability of data volume, the “data lake” was born.

IBM and AtScale Agree: There are 34 Billion Reasons why Enterprises Should Move Data to the Cloud

There is a turf war going on today among technology providers to move their enterprise customers to the cloud. IBM believes so strongly that their future success resides in their ability to get enterprises to the cloud that they just announced the largest technology deal in history, buying Red Hat for $34 billion. As IBM CEO Ginni Rometty pointed out, only 20% of enterprise data workloads have moved to the cloud. This statement highlights the massive opportunity for cloud technology providers to vie for the rapidly growing cloud storage market, estimated to reach nearly $90 billion by 2022.

Topics: cloud transformation, BI Performance

3 Ways an Intelligent Data Fabric Improves Tableau Performance on an Enterprise Data Warehouse

In the fifteen years since Tableau was founded, it has emerged as one of the preeminent business intelligence software tools, to the extent that only Microsoft Excel is utilized by more enterprises to analyze data. Tableau’s popularity stems in many ways from its versatility. The tool allows users to combine data from different sources to produce reports that are in turn utilized to answer critical business questions. Tableau’s use cases span most industries, as well as most departments within a company. For example, a retail organization might have multiple teams employing Tableau to look at both sales data and core financial metrics, while a manufacturing firm might use the software to look at support and inventory data.

Topics: Tableau, cloud transformation

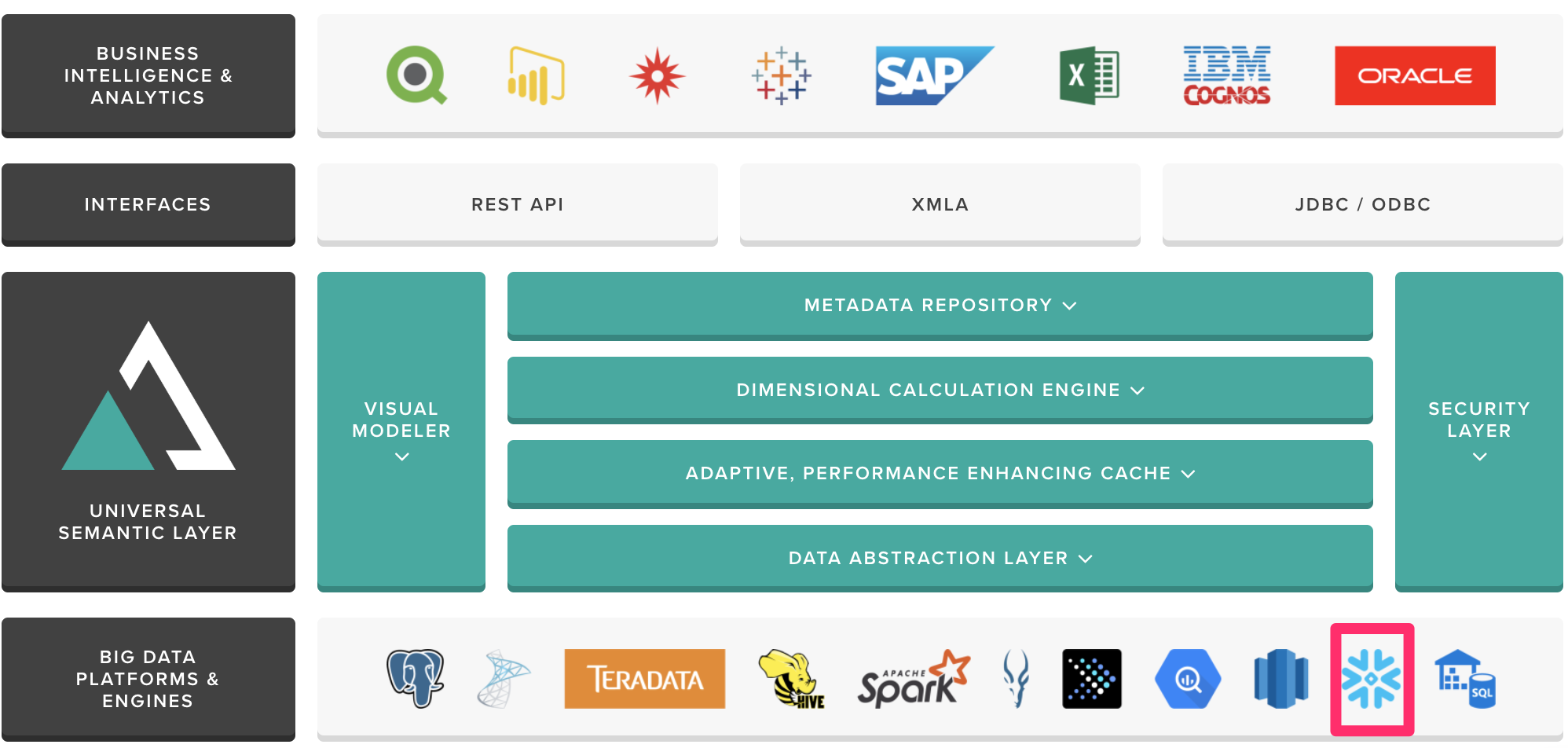

How AtScale Helps Enterprises Improve Performance and Cost on Snowflake

For businesses that deal with petabyte-scale data environments representing billions of individual items such as transactions or material components, the capacity to rapidly analyze that data is vital. Storing data in an on-premises warehouse is an increasingly unpopular option due to the obvious hardware costs and performance challenges. Cloud-based data warehouses have helped enterprises navigate these issues. Most Chief Data Officers at businesses with vast repositories of data are planning to move data to the cloud. Despite competition from large established organizations like Amazon, Google, and Microsoft, Snowflake has emerged as a popular choice with these stakeholders. The company has reached 1,000 customers and just completed a $450 million funding round that values the company at $3.5 billion, despite only emerging from stealth mode in 2014.

Topics: Snowflake

Disruptive technologies inevitably lead to the emergence of new job functions across all levels of an enterprise. The emergence of cloud computing is no different. Companies are positioning themselves to take advantage of the benefits cloud computing provides and with that comes the dawn of a new job title, the “Chief Data Officer”.

Two Ways that AtScale Delivers Faster Time to Insight: Dynamic BI Modeling and Improving Query Concurrency

It is no surprise that as businesses discover more ways to turn data into value, the appetite for storing large amounts of data is also increasing. Low storage costs enabled by cloud computing solutions such as Google BigQuery, Amazon Web Services, and Microsoft Azure allow enterprises to house vast quantities of data with minimal risk. However, analysts note that up to 75% of data remains unused, meaning most companies are not truly using data to run their businesses.

AtScale Enables Enterprises to Optimize Usage and Save on Costs with AWS Cloud Storage

In recent years, enterprise organizations have opted to migrate to or establish data lakes on cloud technologies to lower costs and increase performance. Cloud solutions’ pay-per-use pricing model and ability to scale on demand enable organizations to effectively analyze large data sets at a lower cost than if that data were stored in traditional on-premises solutions. Amazon Web Services’ ability to accommodate expanding large-scale data volumes and to process structured and unstructured data formats in the same environment makes the AWS ecosystem of technologies a highly popular set of solutions to address common data challenges.

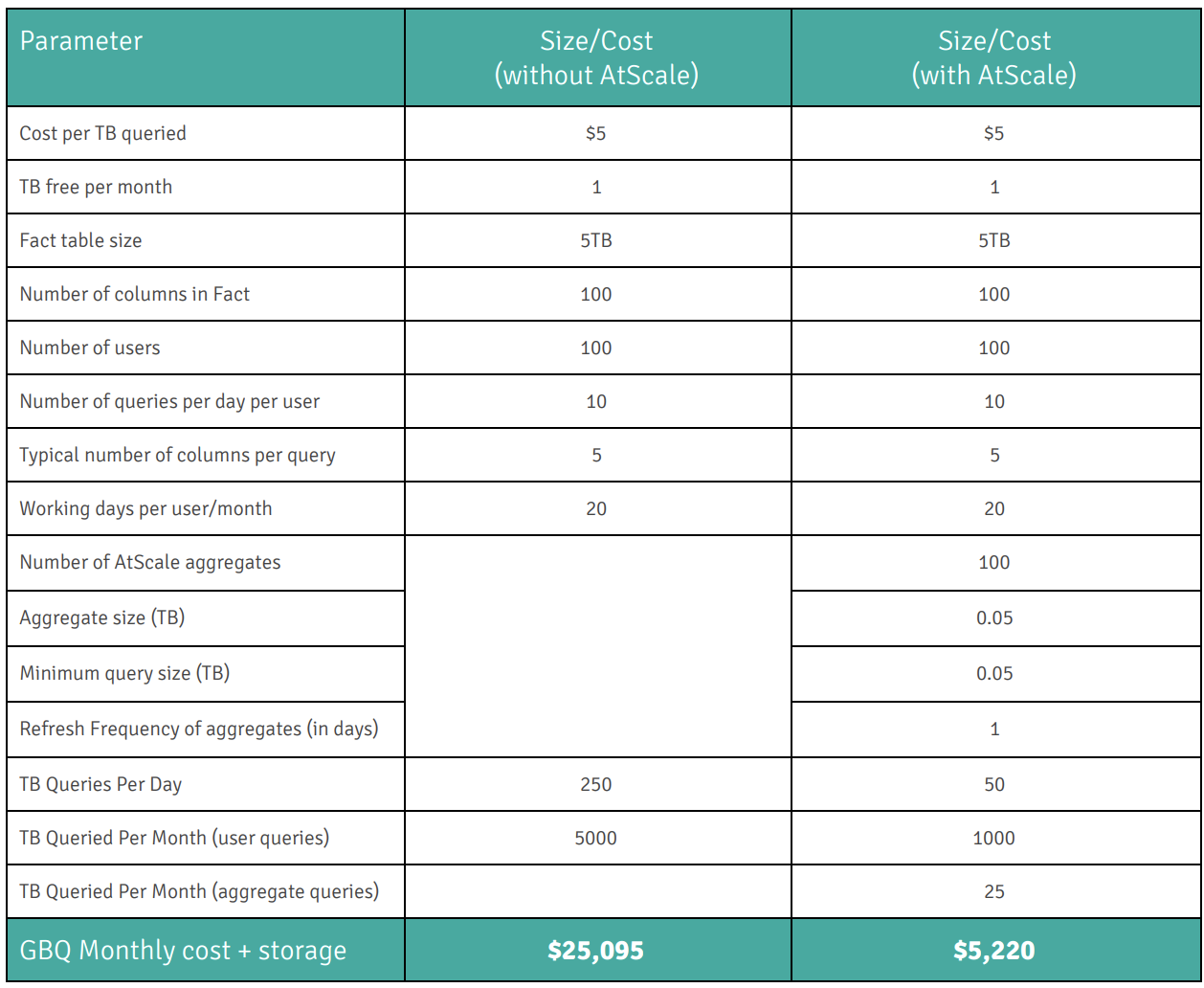

How AtScale uses Machine Learning to help Enterprises efficiently leverage Google BigQuery

To be successful, data-driven organizations must analyze large data sets. This task requires computing capacity and resources that can vary in size depending on the kind of analysis or the amount of data being generated and consumed. Storing large data sets carries high installation and operational costs. Cloud computing, with its common pay-per-use pricing model and ability to scale based on demand, is well suited to big data workloads because it delivers elastic compute and storage capacity.